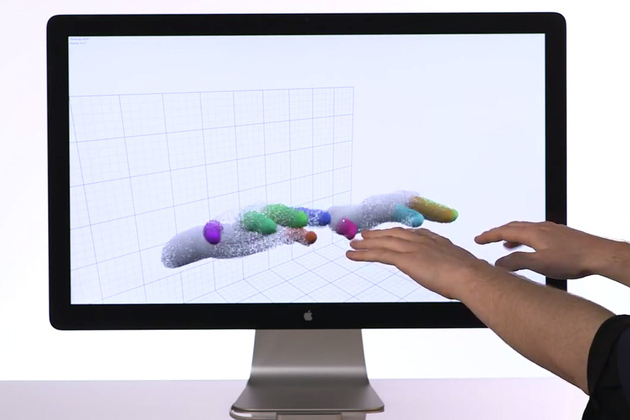

5 Reasons Embedded Systems Designers Should Embrace HCI

As an embedded software engineer interested in bringing HCI design practice to embedded devices, I often hear people say, “Oh, but we don’t have a display on our device, so I’m not sure we need that.” Somehow, the embedded systems community seems to have decided that well-designed interactions only apply to software …

5 Reasons Embedded Systems Designers Should Embrace HCI Read More »