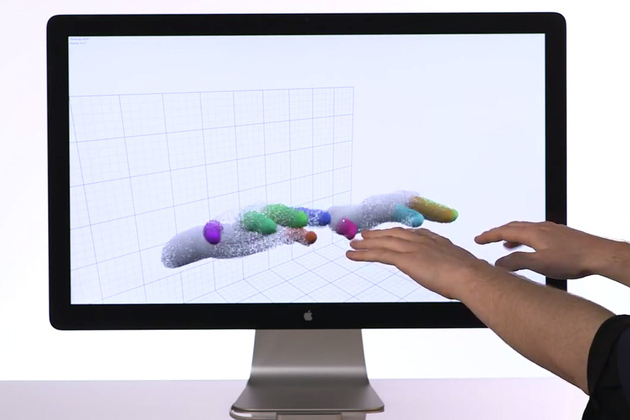

Goodbye Touch! Hello Post-Touch!

Touch is slick and easy, but it’s not for everything. And it’s already going out of style. The new kid in town is Post-Touch, a natural user interface that goes beyond the requirement of touch and detects gestures, usually through a near-field depth camera.