The second creative idea for attacking screen-size limitations comes from Christian Holz and Patrick Baudisch at the Hasso Plattner Institute in Potsdam, near Berlin. They contend that the problem is not our fat fingers but the lack of intelligence used to determine the intended target of a touch. Think of it this way: sometimes you tap a button with the pad of your finger, sometimes with the tip. Either way, you are likely to miss the target by some amount. Current touchscreens, however, have no way of telling which part of your finger made contact, so they make no attempt to compensate for how far off you might be. They simply use the center of the contact area, which is quite inaccurate.
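To make the "center of the contact area" approach concrete, here is a minimal sketch of how a touch controller might reduce a contact patch to a single point. It assumes a hypothetical sensor that reports a small 2D grid of signal strengths. The function name `contact_centroid` and its `threshold` parameter are illustrative only and are not taken from any real touchscreen firmware.

```python
from typing import List, Tuple


def contact_centroid(grid: List[List[float]], threshold: float = 0.0) -> Tuple[float, float]:
    """Return the signal-weighted (x, y) centroid of all cells above `threshold`.

    `grid[y][x]` is a hypothetical per-cell sensor reading; larger means more contact.
    """
    total = sx = sy = 0.0
    for y, row in enumerate(grid):
        for x, value in enumerate(row):
            if value > threshold:  # ignore cells with no (or negligible) contact
                total += value
                sx += x * value
                sy += y * value
    if total == 0.0:
        raise ValueError("no contact detected")
    return sx / total, sy / total


# A hypothetical contact patch that is heavier toward the lower right of the grid.
patch = [
    [0, 0, 0, 0],
    [0, 1, 2, 0],
    [0, 2, 4, 0],
    [0, 0, 0, 0],
]
print(contact_centroid(patch))
```

Whether the finger touched with its pad or its tip, only this one averaged point is reported. Nothing in it says which part of the finger produced the patch.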

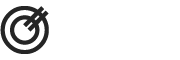

Holz and Baudisch ran experiments showing that users typically hit a variety of different points when trying to touch a target, as shown in the image labeled (a). As you can see, the centers of the areas they touched (solid ovals) were not on the target but tended to be offset from it. On the other hand, the dashed oval shows that these offsets are similar, so they could be compensated for intelligently.
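Because the offsets are consistent, one simple way to picture the compensation is to measure the average offset during a calibration phase and subtract it from later touches. The sketch below assumes that calibration taps and their known targets are available as (x, y) pairs in millimeters. The helper names `learn_offset` and `correct` and all of the numbers are hypothetical and do not come from the study.

```python
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y), assumed to be in millimeters


def learn_offset(taps: List[Point], targets: List[Point]) -> Point:
    """Average (tap - target) vector over a set of calibration trials."""
    if not taps or len(taps) != len(targets):
        raise ValueError("need one target per calibration tap")
    dx = mean(tap[0] - target[0] for tap, target in zip(taps, targets))
    dy = mean(tap[1] - target[1] for tap, target in zip(taps, targets))
    return dx, dy


def correct(tap: Point, offset: Point) -> Point:
    """Shift a raw touch point back by the learned systematic offset."""
    return tap[0] - offset[0], tap[1] - offset[1]


# Hypothetical calibration: every tap lands to the right of the (10, 10) target.
taps = [(12.0, 9.0), (13.0, 10.0), (11.0, 11.0)]
targets = [(10.0, 10.0)] * 3
offset = learn_offset(taps, targets)
print(offset, correct((12.5, 10.2), offset))
```

Subtracting an average only removes the systematic part of the error. It helps here because the offsets cluster together, while the random scatter around that average remains.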

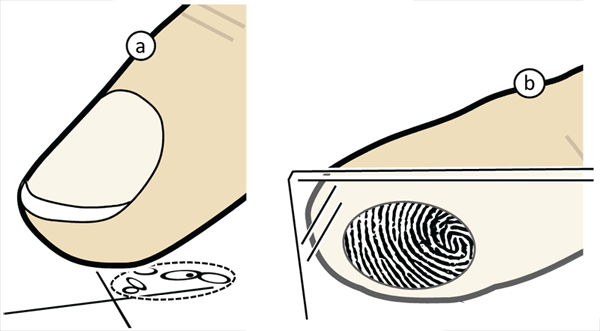

How do we do this? First, they propose a Generalized Perceived Input Point Model to compensate for the offset. Then, using a touchscreen as sensitive as a fingerprint scanner, we can detect the pose of the finger from its print, as shown in (b). With that information, we can make more intelligent decisions about what the target of the touch was.
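One simplified way to picture how a detected pose feeds into the correction is a table of offsets measured for a few calibrated finger poses. When a touch arrives, the correction uses the calibrated pose closest to the one read from the fingerprint. This is a hypothetical nearest-neighbor sketch, not the Generalized Perceived Input Point Model itself. The (roll, pitch) pose representation, the table contents, and the function names are all assumptions made for illustration.

```python
import math
from typing import Dict, Tuple

Pose = Tuple[float, float]   # hypothetical (roll_deg, pitch_deg) estimated from the fingerprint
Point = Tuple[float, float]  # (x, y) in millimeters


def nearest_pose(pose: Pose, table: Dict[Pose, Point]) -> Pose:
    """Pick the calibrated pose closest to the observed one (Euclidean in degrees)."""
    return min(table, key=lambda p: math.hypot(p[0] - pose[0], p[1] - pose[1]))


def compensate(contact: Point, pose: Pose, table: Dict[Pose, Point]) -> Point:
    """Subtract the offset recorded for the closest calibrated pose."""
    if not table:
        raise ValueError("offset table is empty")
    dx, dy = table[nearest_pose(pose, table)]
    return contact[0] - dx, contact[1] - dy


# Hypothetical per-pose offsets: flatter fingers (low pitch) miss by more.
offsets = {
    (0.0, 15.0): (0.0, 3.0),
    (0.0, 65.0): (0.0, 1.0),
    (45.0, 45.0): (2.0, 1.5),
}
print(compensate((20.0, 31.0), (5.0, 60.0), offsets))
```

A single global offset would be a compromise across poses. Conditioning on pose lets each posture use its own correction.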

The more accurately we can detect touches, the smaller we can make our screens while keeping them usable. Using the new model and fingerprint detection, Holz and Baudisch obtain 1.8 times higher accuracy than capacitive sensing. The approach also enables new interactions, such as rolling and pointing gestures. This is one more step toward smaller yet more intuitive touchscreens, with fewer missed buttons.
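To see why accuracy translates into screen size, one option is to express accuracy as the radius around a target that contains a given fraction of touches (95% here). A button must be at least that large to be hit reliably. The sketch below assumes that definition and uses hypothetical touch-error data. It is not necessarily how Holz and Baudisch computed their 1.8x figure.

```python
import math
from typing import List, Tuple


def radius_covering(errors: List[Tuple[float, float]], fraction: float = 0.95) -> float:
    """Smallest radius around the target that contains `fraction` of the touches.

    `errors` holds (dx, dy) offsets of each touch from its intended target.
    """
    if not errors or not 0.0 < fraction <= 1.0:
        raise ValueError("need at least one touch and 0 < fraction <= 1")
    distances = sorted(math.hypot(dx, dy) for dx, dy in errors)
    needed = math.ceil(fraction * len(distances))  # number of touches that must fall inside
    return distances[needed - 1]


# Hypothetical errors in millimeters: 19 small misses and one large outlier.
errors = [(0.0, 1.0)] * 19 + [(3.0, 4.0)]
r = radius_covering(errors)
print(f"95% radius: {r} mm, minimum button diameter: {2 * r} mm")
```

Under this definition, a technique that shrinks the radius by a factor of 1.8 also shrinks the diameter of a reliably hittable button by 1.8. It shrinks the button's area by about 1.8² ≈ 3.2.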